Oct 18, 2018. GitLab ships with its own free CI/CD, which works pretty well. This post gives an example of the CI/CD configuration file .gitlab-ci.yml for a Python project running on a GitLab Windows runner.

Part 2, presenting a pattern for a CI/CD pipeline implementation using GitLab CI for Continuous Integration and Continuous Deployment of Python projects distributed as wheel packages using PyPI.

As a general rule in my .gitlab-ci.yml implementations, I try to limit bash code to glue logic: just creating a bridge between the GitLab pipeline “session” and some utility that forms the core of the job. As a rule of thumb, I would limit bash code to no more than 5 lines; once you're over that, it's time to rethink and start implementing the scripting in a more structured and maintainable language such as Python.

There are some people out there who have created unit test frameworks for bash, but the fundamental limitations of the language remain, and in my opinion these limitations create numerous long-term maintainability problems that are best avoided by using a more capable language. My personal preference at this time is Python, but there are other choices that may be more suitable for your environment and projects. For example, if your projects are written in Go, then Go may also be a suitable choice for your tooling; the same goes for other languages, provided they have plenty of useful libraries for building pipeline-related utilities. Just keep in mind that you want developers to have to learn as few different languages as possible, although this should not be considered justification for writing your pipeline tooling in C. That, I think, would just be masochism.

One other rule for pipelines is that developers should be able to run the same code as GitLab CI to test the pipeline jobs for themselves. This is another reason to minimize the lines of code in the job definitions themselves (and thus the bash code). In a Makefile-based project it's easy to set up the pipeline jobs so they just call a make target, which is very easy for developers to replicate, although care must be taken with pipeline context in the form of environment variables. In this Python project, instead of introducing another Docker image dependency to acquire make, I've just used Python script files. Another consideration for developers running pipeline jobs, which I have not explored much, is that GitLab has the capability to run pipeline jobs locally.

Project structure

It’s probably useful to have a quick outline of the project folder structure so that you have an idea of the file system context the pipeline is operating in.

The dist folder is where flit deposits the wheel packages it builds. The scripts folder is for pipeline-related scripts. The tests folder is where unit and integration tests are located, separate from the main project source code. Keeping the tests separate from the main source code is a practice I’ve only recently adopted, but I’ve found it quite useful: because the tests are separate, the import syntax naturally references the project source paths explicitly, so you usually end up testing the “public” or “exported” interface of modules in the project. In a more complex project it also becomes easier to distinguish unit tests from integration tests from business tests. Finally, packaging becomes easier; when tests are included in the main source tree in their respective tests folders, flit automatically packages them in the wheel. The tests are not very useful in the wheel package and make it a little larger (maybe much larger if your tests have data files as well).

Pipeline stages

In the download_3gpp GitLab CI pipeline I’ve defined four stages: pre-package, package, test and publish. In GitLab CI, stages are executed sequentially in the defined order and, if necessary, dependency artifacts can be passed from a preceding stage to a subsequent stage.

The pre-package stage is basically “stuff that needs to be done before packaging” because the packaging (or subsequent stages) depends on it. The test stage must come after the package stage because the test stage consumes the wheel package generated by the package stage. Once all the tests have passed, the publish stage can be executed.
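The stage ordering matters because GitLab's dependencies keyword is documented as accepting only jobs from earlier stages. A minimal sketch of that rule in plain Python, using a pipeline described as a dictionary (roughly what a YAML loader would return for .gitlab-ci.yml); the job names are hypothetical, not the ones in the download_3gpp pipeline:

```python
from typing import Dict, List

# Hypothetical pipeline, shaped like a parsed .gitlab-ci.yml.
PIPELINE = {
    "stages": ["pre-package", "package", "test", "publish"],
    "jobs": {
        "update_version": {"stage": "pre-package", "dependencies": []},
        "build_wheel": {"stage": "package", "dependencies": ["update_version"]},
        "test_install": {"stage": "test", "dependencies": ["build_wheel"]},
        "publish": {"stage": "publish", "dependencies": ["update_version", "build_wheel"]},
    },
}


def dependency_errors(pipeline: Dict) -> List[str]:
    """List every dependency that is unknown or not from an earlier stage."""
    stage_index = {name: i for i, name in enumerate(pipeline["stages"])}
    jobs = pipeline["jobs"]
    errors = []
    for job_name, job in jobs.items():
        for dep in job.get("dependencies", []):
            if dep not in jobs:
                errors.append(f"{job_name}: unknown job {dep!r}")
            elif stage_index[jobs[dep]["stage"]] >= stage_index[job["stage"]]:
                errors.append(f"{job_name}: {dep!r} is not in an earlier stage")
    return errors


def execution_order(pipeline: Dict) -> List[List[str]]:
    """Group jobs by stage: stages run one after another, jobs within a stage in parallel."""
    return [
        [name for name, job in pipeline["jobs"].items() if job["stage"] == stage]
        for stage in pipeline["stages"]
    ]
```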

Package release identity management

For Python package release identification, I use a short code snippet in the main __init__.py file.
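A minimal sketch of what such a snippet can look like, assuming it reads the VERSION file (described next) from the package directory; read_version is a hypothetical helper name:

```python
from pathlib import Path


def read_version(package_dir: Path) -> str:
    """Return the release id stored in <package_dir>/VERSION, without whitespace."""
    version_file = package_dir / "VERSION"
    return version_file.read_text(encoding="utf-8").strip()


# Inside the package's __init__.py this would typically become:
# __version__ = read_version(Path(__file__).parent)
```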

The VERSION file is committed to source control with the content “0.0.0”. This means that by default a generated package will not have a formal release identity, which is important for reducing ambiguity around which packages are actually formal releases. Developers can generate their own packages manually if needed, and the package will acquire the default “0.0.0” release id, which clearly communicates that this is not a formal release.

In principle, for any release there must be a single, unique package that identifies itself as a release package using the semantic version for that release. Per PEP-491, for Python wheel packages the package file name takes the form <package name>-<PEP-440 release id>-<language tag>-<abi tag>-<platform tag>.whl
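The naming convention can be checked mechanically. A minimal sketch that splits a wheel file name into those fields; it also accepts the optional build tag that the wheel format allows after the release id, and the example file names are hypothetical:

```python
from typing import NamedTuple, Optional


class WheelName(NamedTuple):
    name: str
    version: str
    build: Optional[str]
    python_tag: str
    abi_tag: str
    platform_tag: str


def parse_wheel_filename(filename: str) -> WheelName:
    """Split <name>-<version>[-<build>]-<python>-<abi>-<platform>.whl into fields."""
    if not filename.endswith(".whl"):
        raise ValueError(f"not a wheel file: {filename!r}")
    # Wheel file names escape "-" inside each field, so "-" only separates fields.
    parts = filename[: -len(".whl")].split("-")
    if len(parts) == 5:
        name, version, python_tag, abi_tag, platform_tag = parts
        build = None
    elif len(parts) == 6:
        name, version, build, python_tag, abi_tag, platform_tag = parts
    else:
        raise ValueError(f"unexpected wheel file name: {filename!r}")
    return WheelName(name, version, build, python_tag, abi_tag, platform_tag)


# Hypothetical example: a pure-Python wheel built by a release pipeline.
print(parse_wheel_filename("example_pkg-1.2.3-py3-none-any.whl"))
```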

The update_version job changes the VERSION file content slightly depending on whether the pipeline is a tagged release, a “non-release” branch (master or feature branch), or a special “dryrun” release tag. The modified VERSION file is then propagated throughout the pipeline as a dependency in subsequent stages.

The dryrun tag takes the form <semantic version>.<pipeline number>-dryrun<int>. The intention of the dryrun tag is to enable testing as much of the pipeline code for a release as possible without actually doing the release. In this case the dryrun tag acquires the semantic version part of the tag and also appends the pipeline number, to indicate that this is not a formal release. In addition, as we will see later, publishing the package does not occur for a dryrun tag. The artifacts generated by a dryrun pipeline are still available for download and review via the artifacts capability of GitLab.

For any pipeline that is not a tagged release, the default “0.0.0” semantic version is used along with the pipeline number. This clearly indicates that the generated package is not a formal release, but an artifact resulting from a pipeline.
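The three cases above can be sketched as a small pure function. This is not the actual update_version.py: the tag formats in the comments are assumptions, and so is using GitLab's predefined CI_COMMIT_TAG and CI_PIPELINE_ID variables as the tag and the pipeline number:

```python
import os
import re
from pathlib import Path
from typing import Mapping, Optional

# Assumed tag formats (hypothetical; the real update_version.py may differ):
#   release tag: 1.2.3          -> release id 1.2.3
#   dryrun tag:  1.2.3-dryrun1  -> release id 1.2.3.<pipeline number>
#   no tag:                     -> release id 0.0.0.<pipeline number>
RELEASE_TAG = re.compile(r"(\d+\.\d+\.\d+)")
DRYRUN_TAG = re.compile(r"(\d+\.\d+\.\d+)-dryrun\d+")
DEFAULT_VERSION = "0.0.0"


def compute_version(tag: Optional[str], pipeline_number: str) -> str:
    """Map the pipeline context onto a release id for the VERSION file."""
    if not tag:
        # Branch pipeline (master or feature branch): never a formal release.
        return f"{DEFAULT_VERSION}.{pipeline_number}"
    release = RELEASE_TAG.fullmatch(tag)
    if release:
        return release.group(1)
    dryrun = DRYRUN_TAG.fullmatch(tag)
    if dryrun:
        # Keep the semantic version, but append the pipeline number.
        return f"{dryrun.group(1)}.{pipeline_number}"
    raise ValueError(f"unrecognised tag format: {tag!r}")


def version_from_environment(env: Mapping[str, str] = os.environ) -> str:
    # CI_COMMIT_TAG is only set in tag pipelines; CI_PIPELINE_ID is set in every pipeline.
    return compute_version(env.get("CI_COMMIT_TAG"), env["CI_PIPELINE_ID"])


def write_version_file(path: Path, version: str) -> None:
    """Overwrite VERSION so later stages receive it as a job artifact."""
    path.write_text(version + "\n", encoding="utf-8")
```

Raising on an unrecognised tag is a deliberate choice in this sketch: a mistyped tag fails the pipeline early instead of quietly producing a package with an unexpected release id.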

Here is a link to update_version.py for your review. I’ve tried to keep this script extremely simple so that it is easy to maintain, and contrary to my normal practice it does not have any tests. If it evolves to be any more complicated than it is now, then it will need unit tests and will probably have to be migrated to its own repo with packaging.

Package generation

The download_3gpp project presently only generates the wheel package for installation, but the packaging stage could also include documentation and other artifacts related to the complete delivery of the project. The download_3gpp project only has the README.rst documentation for the moment.
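A minimal sketch of what a package job script in the scripts folder might do, assuming flit is installed in the job's image: build only the wheel, then make sure exactly one wheel is waiting in dist for the test stage. The function names are hypothetical:

```python
import subprocess
from pathlib import Path


def build_wheel(project_dir: Path) -> None:
    """Ask flit to build only the wheel; flit writes it into dist/."""
    subprocess.run(["flit", "build", "--format", "wheel"], cwd=project_dir, check=True)


def single_wheel(dist_dir: Path) -> Path:
    """Return the one wheel in dist/, failing loudly if there are zero or several."""
    wheels = sorted(dist_dir.glob("*.whl"))
    if len(wheels) != 1:
        raise RuntimeError(f"expected exactly one wheel in {dist_dir}, found {len(wheels)}")
    return wheels[0]
```

Requiring exactly one wheel is a deliberate choice: if a stale package were left in dist, the test stage could otherwise pick up the wrong one.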

Run tests

In the pipeline, the test stage comes after the package stage. In my experience this tends to be the busiest stage for Python projects, as various types of tests are run against the package.

Test package install

The first test is to try installing the generated package to ensure that flit is doing its job correctly with your pyproject.toml configuration.
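A minimal sketch of such an install test, assuming pip is available in the job's interpreter and that the package defines __version__; the module name passed in (for example download_3gpp) is up to the caller. The import check runs from a temporary directory so that the project checkout in the working directory cannot shadow the installed package:

```python
import subprocess
import sys
import tempfile
from pathlib import Path
from typing import List


def install_command(wheel: Path) -> List[str]:
    # Use this interpreter's pip so the wheel lands in the same environment.
    return [sys.executable, "-m", "pip", "install", str(wheel)]


def import_command(module: str) -> List[str]:
    # Import the installed package and print its release id.
    return [sys.executable, "-c", f"import {module}; print({module}.__version__)"]


def check_install(wheel: Path, module: str) -> None:
    """Install the wheel, then import it from outside the project checkout."""
    subprocess.run(install_command(wheel), check=True)
    with tempfile.TemporaryDirectory() as empty_dir:
        # An empty working directory keeps the checkout's source off sys.path.
        subprocess.run(import_command(module), check=True, cwd=empty_dir)
```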

It’s a fairly nominal test, but it is a valid test. Considering how many times now I’ve seen or heard about C/C++ code being distributed that literally doesn’t compile, I think it’s important to include even the most nominal tests in your pipeline; after all, just compiling your code, even once, would be a pretty nominal test.

Run unit tests (and integration, business tests)

This project doesn’t have integration or business tests, so there is only one job. If there were integration and business tests, I would create separate jobs for them so that the tests all run in parallel in the pipeline.

Test coverage analysis

I prefer to run the various coverage analyses in separate jobs, although they could arguably be consolidated into a single job. If I recall correctly, the run-coverage job cannot be consolidated because the stdout that is captured for GitLab coverage reporting is suppressed when outputting to HTML or XML, so having all separate jobs seems cleaner to me.

The run-coverage-threshold-test job fails the pipeline if the test coverage is below a defined threshold. My rule of thumb for Python projects is that test coverage must be above 90% and preferably above 95%. I usually aim for 95%, but depending on the project that sometimes needs to be relaxed a little to 90%.
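coverage.py can enforce a threshold by itself with coverage report --fail-under=90. As an alternative sketch, assuming the plain-text output of coverage report has been captured, the TOTAL line can be parsed and compared with the threshold; the 90% default mirrors the rule of thumb above:

```python
import re

# Matches the last percentage on the TOTAL line, e.g. "TOTAL   120   6   95%".
TOTAL_LINE = re.compile(r"^TOTAL\s.*?(\d+(?:\.\d+)?)%\s*$", re.MULTILINE)


def total_coverage(report: str) -> float:
    """Extract the overall coverage percentage from a text coverage report."""
    match = TOTAL_LINE.search(report)
    if match is None:
        raise ValueError("no TOTAL line found in coverage report")
    return float(match.group(1))


def meets_threshold(report: str, threshold: float = 90.0) -> bool:
    return total_coverage(report) >= threshold
```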

Test code style formatting

Here’s where I’ve run into some problems. The implementations of black and isort sometimes have competing interpretations of code style formatting. If you’re lucky, your code won’t trigger the interaction, but most likely it eventually will.

Adding the isort configuration to pyproject.toml does help, but in my experience it doesn’t solve the problem completely. I’ve ended up having to accept failure in the isort formatting job, which further complicates things because care must also be taken when running black and isort manually to apply the formatting before commit; black must be run last to ensure that its formatting takes precedence over isort’s.

A bit frustrating right now, but hopefully it will be resolved in the not too distant future.
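When running the tools by hand, the order matters as described above. A minimal sketch of a helper for the scripts folder that always runs isort before black, with a report-only mode like the pipeline jobs; it assumes both tools are installed in the current interpreter, and that isort is version 5 or later so directory arguments are processed recursively:

```python
import subprocess
import sys
from typing import List, Sequence


def format_commands(paths: Sequence[str], check: bool = False) -> List[List[str]]:
    """isort first, black last, so black's formatting wins any disagreement."""
    isort = [sys.executable, "-m", "isort"]
    black = [sys.executable, "-m", "black"]
    if check:
        # Report-only mode, as used in the pipeline jobs.
        isort.append("--check-only")
        black.append("--check")
    return [isort + list(paths), black + list(paths)]


def run_formatters(paths: Sequence[str], check: bool = False) -> None:
    for command in format_commands(paths, check):
        subprocess.run(command, check=True)
```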

Publish to PyPI

In the past I’ve had the publish job only run on a release tag using the only property in the job. The problem with this is that the publish code is only run on release, so if you make changes to the release job in a feature branch, you won’t really know whether the changes are broken until you actually release. Too many times we’ve had the release meeting, everyone agreed that we were ready to release, we tagged the release, and the build failed. This creates a considerable sense of urgency at the eleventh hour (as it should) when everyone is expecting the release artifacts to just emerge. This can be avoided by ensuring that as much of the release code as possible is run on the feature branch without actually publishing a release.

In the case of this pipeline, the only difference in the publishing job between a release pipeline and a non-release pipeline is the use of the flit publish command. In my experience it’s pretty easy to get this right; it’s much more likely that you’ve forgotten to update job dependencies or some other “pipeline machinery”, which will now be exercised in a feature branch before you get to a release.
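A minimal sketch of that idea: the publish job runs in every pipeline, and only the final flit publish call is gated on a formal release tag, so the rest of the job is still exercised on dryrun tags and branches. The release tag pattern is an assumption:

```python
import re
import subprocess
from typing import List, Optional

# Assumed release tag format (hypothetical): a plain semantic version such as 1.2.3.
RELEASE_TAG = re.compile(r"\d+\.\d+\.\d+")


def is_release(tag: Optional[str]) -> bool:
    """Only a formal release tag publishes; dryrun tags and branches do not."""
    return tag is not None and RELEASE_TAG.fullmatch(tag) is not None


def publish_command(tag: Optional[str]) -> Optional[List[str]]:
    return ["flit", "publish"] if is_release(tag) else None


def publish(tag: Optional[str]) -> None:
    command = publish_command(tag)
    if command is None:
        print(f"Not a release pipeline (tag={tag!r}); skipping flit publish.")
        return
    subprocess.run(command, check=True)
```

In the job itself this might be called with os.environ.get("CI_COMMIT_TAG"), since GitLab only sets that variable in tag pipelines.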

Conclusions

I’ve described a set of GitLab CI job patterns for a simple Python project. I hope it’s useful.

For a more complex project that includes docstring documentation and Sphinx documentation, other considerations would include:

- testing docstring style and running docstring test code

- publishing documentation to readthedocs
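Running docstring test code is possible with the standard library's doctest module (pytest's --doctest-modules option does the same inside a pytest run). A minimal sketch with a hypothetical helper whose docstring examples double as tests:

```python
import doctest


def normalise_tag(tag: str) -> str:
    """Strip a leading "v" from a release tag (hypothetical helper).

    >>> normalise_tag("v1.2.3")
    '1.2.3'
    >>> normalise_tag("1.2.3")
    '1.2.3'
    """
    return tag[1:] if tag.startswith("v") else tag


if __name__ == "__main__":
    # Runs every example found in this module's docstrings and reports failures.
    results = doctest.testmod()
    print(f"{results.attempted} examples, {results.failed} failed")
```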

Introduction

This blog post describes how to configure a Continuous Integration (CI) process on GitLab for a Python application. It uses one of my Python applications (bild) to show how to set up the CI process:

In this blog post, I’ll show how I set up a GitLab CI process to run the following jobs on a Python application:

- Unit and functional testing using pytest

- Linting using flake8

- Static analysis using pylint

- Type checking using mypy

What is CI?

To me, Continuous Integration (CI) means frequently testing your application in an integrated state. However, the term ‘testing’ should be interpreted loosely as this can mean:

- Integration testing

- Unit testing

- Functional testing

- Static analysis

- Style checking (linting)

- Dynamic analysis

To facilitate running these tests, it’s best to have these tests run automatically as part of your configuration management (git) process. This is where GitLab CI is awesome!

In my experience, I’ve found it really beneficial to develop a test script locally and then add it to the CI process that gets automatically run on GitLab CI.

Getting Started with GitLab CI

Before jumping into GitLab CI, here are a few definitions:

- pipeline: a set of tests to run against a single git commit.

- runner: GitLab uses runners on different servers to actually execute the tests in a pipeline; GitLab provides runners to use, but you can also spin up your own servers as runners.

- job: a single test being run in a pipeline.

- stage: a group of related tests being run in a pipeline.

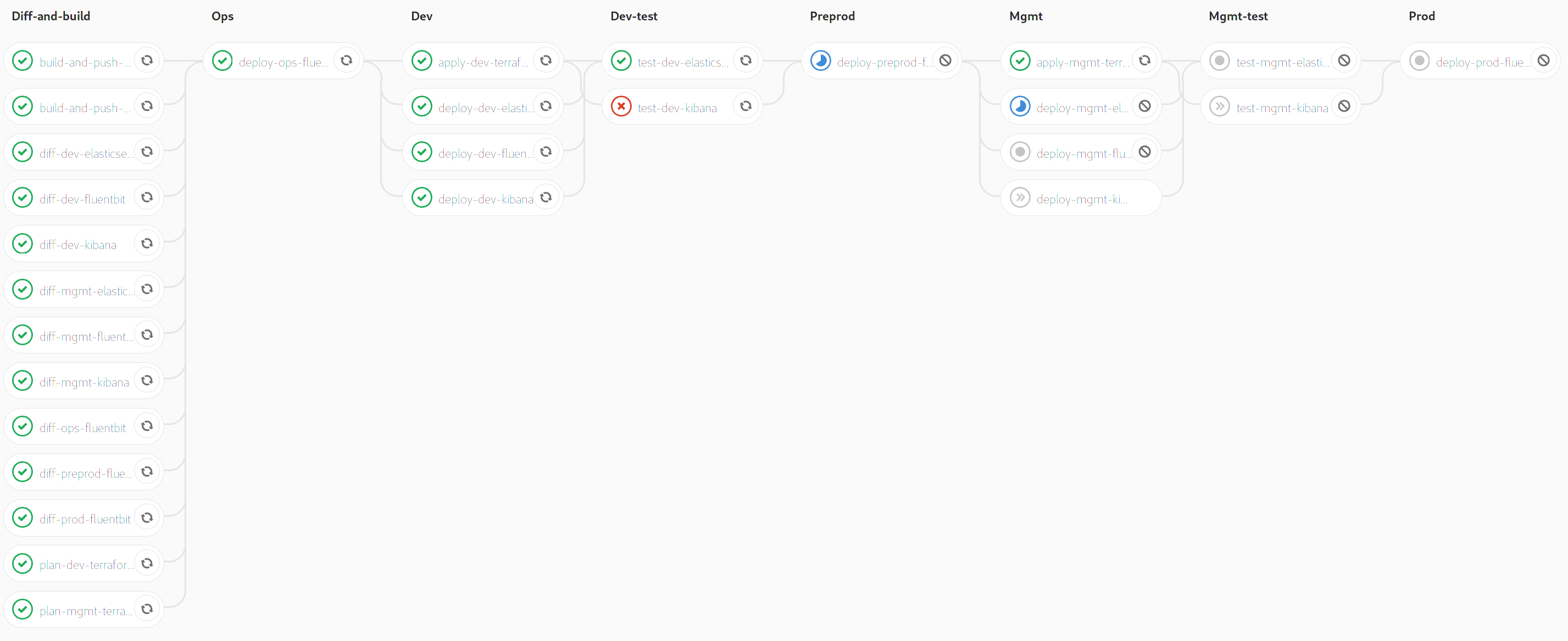

Here’s a screenshot from GitLab CI that helps illustrate these terms:

GitLab utilizes the ‘.gitlab-ci.yml’ file to run the CI pipeline for each project. The ‘.gitlab-ci.yml’ file should be found in the top-level directory of your project.

While there are different methods of running a test in GitLab CI, I prefer to utilize a Docker container to run each test. I’ve found the overhead in spinning up a Docker container to be trivial (in terms of execution time) when doing CI testing.

Creating a Single Job in GitLab CI

The first job that I want to add to GitLab CI for my project is to run a linter (flake8). In my local development environment, I would run this command:

This command can be transformed into a job on GitLab CI in the ‘.gitlab-ci.yml’ file:

This YAML file tells GitLab CI what to run on each commit pushed up to the repository. Let’s break down each section…

The first line (image: “python:3.7”) instructs GitLab CI to use Docker for performing ALL of the tests for this project, specifically to use the ‘python:3.7’ image that is found on Docker Hub.

The second section (before_script) is the set of commands to run in the Docker container before starting each job. This is really beneficial for getting the Docker container into the correct state by installing all the Python packages needed by the application.

The third section (stages) defines the different stages in the pipeline. There is only a single stage (Static Analysis) at this point, but later a second stage (Test) will be added. I like to think of stages as a way to group together related jobs.

The fourth section (flake8) defines the job; it specifies the stage (Static Analysis) that the job should be part of and the commands to run in the Docker container for this job. For this job, the flake8 linter is run against the python files in the application.

At this point, the updates to the ‘.gitlab-ci.yml’ file should be committed to git and then pushed up to GitLab:

GitLab CI will see that there is a CI configuration file (.gitlab-ci.yml) and use it to run the pipeline:

This is the start of a CI process for a python project! GitLab CI will run a linter (flake8) on every commit that is pushed up to GitLab for this project.

Running Tests with pytest on GitLab CI

When I run my unit and functional tests with pytest in my development environment, I run the following command in my top-level directory:

My initial attempt at creating a new job to run pytest in the ‘.gitlab-ci.yml’ file was:

However, this did not work, as pytest was unable to find the ‘bild’ module (i.e., the source code) to test:

The problem encountered here is that the ‘bild’ module cannot be found by the test_*.py files, because the top-level directory of the project is not on the system path:

The solution that I came up with was to add the top-level directory to the system path within the Docker container for this job:

With the updated system path, this job was able to run successfully:
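The fix amounts to putting the project root on the module search path before pytest starts. A minimal sketch of the same idea in Python, assuming the job runs from the repository root: it prepends that directory to PYTHONPATH for a pytest subprocess. (Invoking pytest as python -m pytest also adds the current directory to sys.path, which is another way around the same error.)

```python
import os
import subprocess
from pathlib import Path
from typing import Dict, Mapping


def env_with_project_root(root: Path, base: Mapping[str, str]) -> Dict[str, str]:
    """Copy the environment, prepending the project root to PYTHONPATH."""
    env = dict(base)
    existing = env.get("PYTHONPATH")
    env["PYTHONPATH"] = os.pathsep.join([str(root), existing]) if existing else str(root)
    return env


def run_pytest(root: Path) -> int:
    """Run the pytest console script with the project root importable."""
    env = env_with_project_root(root, os.environ)
    return subprocess.run(["pytest"], cwd=root, env=env).returncode
```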

Final GitLab CI Configuration

Here is the final .gitlab-ci.yml file that runs the static analysis jobs (flake8, mypy, pylint) and the tests (pytest):


Here is the resulting output from GitLab CI:


One item that I’d like to point out is that pylint is reporting some warnings, but I find this acceptable. I still want pylint running in my CI process, but I don’t care if it has failures; I’m more concerned with trends over time (are new warnings being introduced?). Therefore, I set the pylint job to be allowed to fail via the ‘allow_failure’ setting.
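The same allow-failure behaviour is handy when running the checks locally before pushing. A minimal sketch of a local runner that mirrors the flake8, mypy, pylint and pytest jobs and, like allow_failure, reports a pylint failure without failing the overall run; the command arguments (such as the bild path) are assumptions:

```python
import subprocess
from typing import Callable, List, NamedTuple


class Check(NamedTuple):
    name: str
    command: List[str]
    allow_failure: bool = False


# Mirrors the CI jobs; the "bild" path is an assumption about the project layout.
CHECKS = [
    Check("flake8", ["flake8", "bild"]),
    Check("mypy", ["mypy", "bild"]),
    Check("pylint", ["pylint", "bild"], allow_failure=True),
    Check("pytest", ["pytest"]),
]


def run_checks(checks: List[Check], runner: Callable[[List[str]], int]) -> bool:
    """Run every check; only failures without allow_failure fail the whole run."""
    ok = True
    for check in checks:
        if runner(check.command) == 0:
            status = "passed"
        else:
            status = "failed (allowed)" if check.allow_failure else "FAILED"
            ok = ok and check.allow_failure
        print(f"{check.name}: {status}")
    return ok


def subprocess_runner(command: List[str]) -> int:
    return subprocess.run(command).returncode
```

For example, run_checks(CHECKS, subprocess_runner) runs each tool in turn and returns False only if flake8, mypy or pytest fails.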